A well-known player in the Internet advertising industry asked VIAcode to help one of its clients integrate analytics data from AWS into Azure.

The Problem

The client was looking for an automated way to segment the advertising data accumulated for its various products. This required a new system to migrate telemetry data from AWS to Azure. The system would then segment the data further by various parameters, using analytics tools and machine learning technologies.

Requirements

The system was expected to handle a high load of 860 million requests and 190 GB of data per month. Its requirements were:

- High performance

- Ease of maintenance

- Simple configuration of segmentation rules

- Robust monitoring
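To put the stated load in perspective, the monthly figures can be converted into rough averages. This assumes a 30-day month, that the data volume is measured in decimal gigabytes (10^9 bytes), and that traffic is spread evenly; real peaks would be higher than these averages.

$$
\begin{aligned}
\text{requests per second} &\approx \frac{860 \times 10^{6}}{30 \times 86\,400\ \text{s}} \approx 332 \\
\text{data per day} &\approx \frac{190\ \text{GB}}{30} \approx 6.3\ \text{GB} \\
\text{bytes per request} &\approx \frac{190 \times 10^{9}\ \text{B}}{860 \times 10^{6}} \approx 221\ \text{B}
\end{aligned}
$$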

Delivering the system, along with its documentation and user training classes, required a team of four developers and DevOps specialists working for two months.

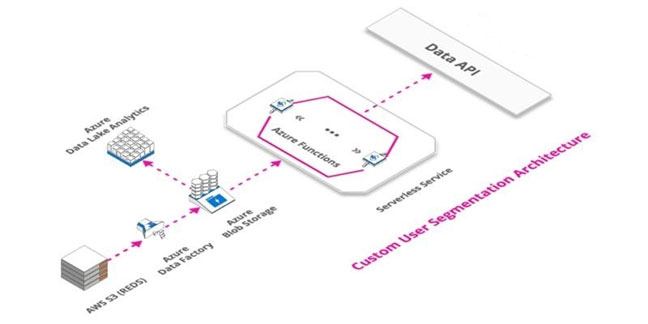

Building the Solution With Azure Data Factory and Data Lake

The client's original design built the solution on Azure Data Factory (ADF), which would upload data into Azure Data Lake Storage daily. The data would then be available for analysis in Azure Data Lake Analytics, and Azure Functions would apply the segmentation rules and transfer the segmented data to the Data API.

Proposed solution

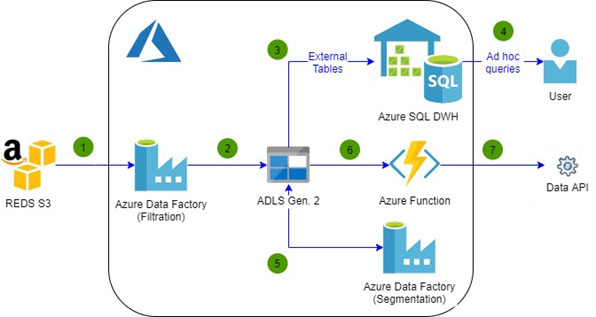

VIAcode engineers reviewed the proposed design and suggested the following changes to improve performance and simplify maintenance while keeping complexity and costs as low as possible:

- Replace Azure Data Lake Analytics (ADLA) with Azure SQL Data Warehouse (Azure SQL DWH), because ADLA does not support Azure Data Lake Storage Gen2, which was released after the initial draft of the system design had been created.

- Use Azure Data Factory instead of Azure Functions for data segmentation. Azure Functions are not well suited to this kind of bulk data processing; the required operations are better performed with map-reduce or SQL-like technologies.

- Introduce a mechanism for updating the set of heuristic segmentation rules in production on the fly, with no changes to the existing infrastructure or source code.

- Use Azure Monitor Alerts to monitor the whole solution, including Azure Data Factory.
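The idea behind updating segmentation rules without touching code is to treat the rules as data rather than logic. The sketch below illustrates that principle in Python under stated assumptions: the JSON rule format, the operator names, and the functions `load_rules`, `matches`, and `segment` are all hypothetical and are not taken from the VIAcode implementation, which ran segmentation inside an ADF pipeline in a way this article does not describe.

```python
import json
import operator
from typing import Any

# Hypothetical rule format: each rule names a segment and lists conditions
# that must all hold. In production the JSON could be stored alongside the
# data and reloaded on every run (an assumption, not the documented design),
# so editing it changes segmentation without redeploying code.
RULES_JSON = """
[
  {"segment": "mobile_heavy",
   "conditions": [{"field": "device", "op": "eq", "value": "mobile"},
                  {"field": "sessions", "op": "ge", "value": 10}]},
  {"segment": "eu_visitors",
   "conditions": [{"field": "country", "op": "in", "value": ["DE", "FR", "PL"]}]}
]
"""

# Supported comparison operators, mapped to plain Python callables.
OPS = {
    "eq": operator.eq,
    "ne": operator.ne,
    "gt": operator.gt,
    "ge": operator.ge,
    "lt": operator.lt,
    "le": operator.le,
    "in": lambda actual, allowed: actual in allowed,
}


def load_rules(text: str) -> list[dict[str, Any]]:
    """Parse the rule set and reject unknown operators before any data is processed."""
    rules = json.loads(text)
    for rule in rules:
        for cond in rule["conditions"]:
            if cond["op"] not in OPS:
                raise ValueError(f"unknown operator: {cond['op']}")
    return rules


def matches(record: dict[str, Any], rule: dict[str, Any]) -> bool:
    """Return True if the record satisfies every condition of the rule."""
    for cond in rule["conditions"]:
        if cond["field"] not in record:
            return False  # a missing field never matches
        if not OPS[cond["op"]](record[cond["field"]], cond["value"]):
            return False
    return True


def segment(record: dict[str, Any], rules: list[dict[str, Any]]) -> list[str]:
    """Return the names of all segments the record falls into, in rule order."""
    return [rule["segment"] for rule in rules if matches(record, rule)]


rules = load_rules(RULES_JSON)
print(segment({"device": "mobile", "sessions": 12, "country": "DE"}, rules))
# prints ['mobile_heavy', 'eu_visitors']
```

Adding a new segment then means appending an entry to the JSON document; the evaluation code stays the same. At the data volumes described here, the same rules-as-data approach would more likely be applied as a join or filter in a SQL-like engine than record by record in Python.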

Benefits of our solution

VIAcode's design is also based on Azure Data Factory. After initial processing, data is uploaded into Azure Data Lake Storage Gen2, where it is available in raw form for analysis in Azure SQL Data Warehouse. Analysts can therefore query it with T-SQL without having to learn a new tool.

At the same time, a dedicated ADF pipeline segments the newly stored data according to the current rules. Once segmentation is complete, a component built with Azure Functions sends the data to the Data API.

Deployment is automated with Azure Pipelines, so the solution can be deployed and redeployed easily in any Azure environment. This lets the customer create a new instance of the solution for development or testing with a single click.

The Results

VIAcode quickly provided a robust solution that achieved the stated goals:

- Performance. Moving the segmentation logic from Azure Functions to Azure Data Factory improved overall system performance by 50%, while maintenance costs were cut in half.

- Maintainability:

  - The solution is built on services native to the Azure cloud platform and is monitored with Azure Monitor.

  - Updating the set of segmentation rules requires no changes to the existing infrastructure or source code.

  - Azure Pipelines CI/CD is configured so that the entire solution can be deployed to any Azure subscription with a single click.